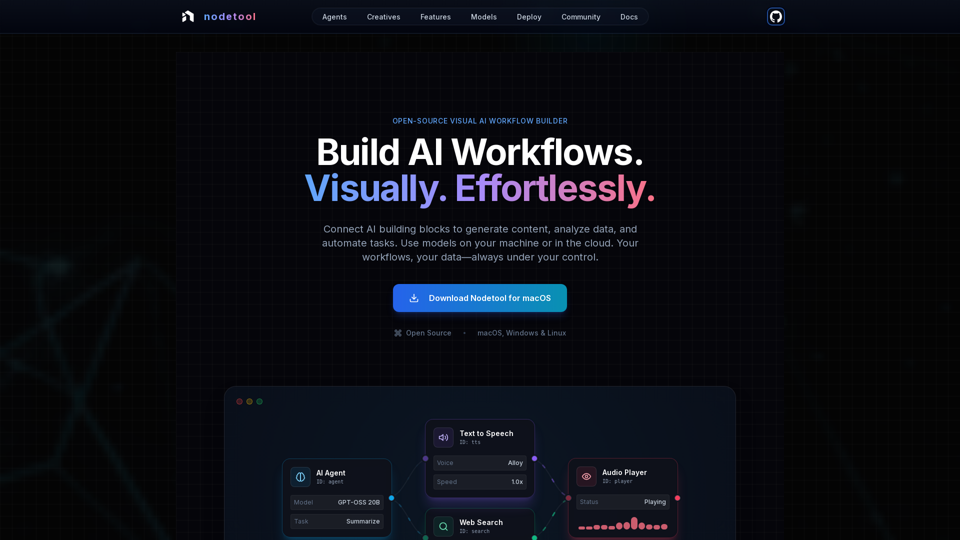

Product Features of Nodetool AI

Overview

Nodetool AI is an open-source, local-first, visual AI workflow builder for creating advanced LLM agents, RAG pipelines, and multimodal data flows. It combines a node-based interface with automation capabilities tailored to AI tasks, and it emphasizes privacy, local execution, and user control.

Main Purpose and Target User Group

- Main Purpose: To provide a unified, visual, and code-free platform for building, running, and deploying complex AI workflows, from generative AI and media pipelines to autonomous agents and document intelligence.

- Target User Group: AI developers, data scientists, researchers, creatives, and anyone looking to build and automate AI applications without extensive coding, with a strong preference for privacy, local control, and open-source solutions.

Function Details and Operations

Visual Workflow Automation:

- Drag-and-drop canvas for orchestrating LLMs, data tools, and APIs.

- Type-safe connections ensure workflow integrity.

- Real-time execution monitoring and step-by-step inspection for transparency and debugging.
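To illustrate what a type-safe connection means in a node graph, here is a minimal sketch in Python. It is not Nodetool's actual API: the `Port`, `Node`, and `Graph` classes and the type names are hypothetical, and the only point is that an edge is rejected when the output type and input type differ.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Port:
    """A named input or output slot carrying one kind of data."""
    name: str
    dtype: str  # e.g. "text", "image", "audio"


@dataclass
class Node:
    """A workflow step with typed inputs and outputs (hypothetical model)."""
    name: str
    inputs: dict[str, Port] = field(default_factory=dict)
    outputs: dict[str, Port] = field(default_factory=dict)


class Graph:
    def __init__(self) -> None:
        self.edges: list[tuple[str, str, str, str]] = []

    def connect(self, src: Node, out: str, dst: Node, inp: str) -> None:
        """Add an edge only if the output type matches the input type."""
        src_port = src.outputs[out]
        dst_port = dst.inputs[inp]
        if src_port.dtype != dst_port.dtype:
            raise TypeError(
                f"cannot connect {src.name}.{out} ({src_port.dtype}) "
                f"to {dst.name}.{inp} ({dst_port.dtype})"
            )
        self.edges.append((src.name, out, dst.name, inp))


prompt = Node("prompt", outputs={"text": Port("text", "text")})
llm = Node(
    "llm",
    inputs={"prompt": Port("prompt", "text")},
    outputs={"reply": Port("reply", "text")},
)
upscaler = Node("upscaler", inputs={"image": Port("image", "image")})

graph = Graph()
graph.connect(prompt, "text", llm, "prompt")  # text -> text: accepted

try:
    graph.connect(llm, "reply", upscaler, "image")  # text -> image: rejected
except TypeError as err:
    rejected = str(err)
```

Checking types when the edge is created, rather than when the workflow runs, is what lets a visual editor refuse an invalid connection while the user is still dragging it.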

Generative AI & Media Pipelines:

- Connect nodes for video generation, text analysis, image creation, and multimodal processing.

- Supports models like Flux, SDXL, OpenAI Sora, Google Veo, and custom models.

Local-First RAG & Vector DBs:

- Index documents and build semantic search pipelines entirely on the local machine.

- Integrated ChromaDB for RAG.
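The idea of indexing documents and searching them on the local machine can be sketched with the Python standard library alone. This is a toy stand-in, not Nodetool's pipeline and not the ChromaDB API: `embed` uses plain word counts where a real setup would use an embedding model, and the hypothetical `LocalIndex` class keeps vectors in memory where a vector database would persist them.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class LocalIndex:
    """In-memory index; a vector database would persist these vectors."""

    def __init__(self) -> None:
        self.docs: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.docs.append((text, embed(text)))

    def query(self, question: str, k: int = 1) -> list[str]:
        """Return the k stored documents most similar to the question."""
        q = embed(question)
        ranked = sorted(self.docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


index = LocalIndex()
index.add("Invoices are due within 30 days of receipt.")
index.add("The office is closed on public holidays.")
index.add("Refunds are processed within five business days.")

top = index.query("When are invoices due?", k=1)
print(top)
```

In a RAG workflow, the retrieved passages would then be placed into the prompt of an LLM; nothing in this sketch leaves the machine.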

Autonomous AI Agents:

- Build agents capable of web searching, browsing, tool usage, and multi-agent systems for complex task solving.

Open Source & Privacy-First Architecture:

- AGPL-3.0 licensed, allowing inspection, modification, and self-hosting.

- Local-first processing keeps data private: no data collection, no telemetry, and cloud use is opt-in only.

- Supports offline operation once models are downloaded.

Deployment Flexibility:

- Run workflows locally for maximum privacy or deploy to cloud services (RunPod, Google Cloud, custom servers).

- One-command deployment (`nodetool deploy`) to provision infrastructure, download models, and configure containers.

- Scales to zero, paying only for active inference time.
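To see why scaling to zero matters for cost, a small worked example helps. The numbers below are purely hypothetical (they are not prices from Nodetool or any provider) and assume billing proportional to running time.

```python
HOURLY_RATE = 2.00          # hypothetical GPU price per hour, not a real quote
DAYS = 30
ACTIVE_HOURS_PER_DAY = 1.5  # hypothetical time spent actually running inference

# An always-on instance is billed for every hour of the month.
always_on = HOURLY_RATE * 24 * DAYS

# A scale-to-zero deployment is billed only while inference is running.
scale_to_zero = HOURLY_RATE * ACTIVE_HOURS_PER_DAY * DAYS

print(f"always on:     {always_on:.2f}")
print(f"scale to zero: {scale_to_zero:.2f}")
```

Under these assumed figures the always-on instance costs 1440.00 and the scale-to-zero deployment 90.00 for the month; real savings depend on actual pricing, cold-start behavior, and usage patterns.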

Universal Model Support:

- Local Inference:

  - MLX (Apple Silicon optimized for LLMs, audio, image generation).

  - Ollama (easy setup for local LLMs).

  - llama.cpp (cross-platform GGUF model inference on CPU/GPU).

  - vLLM (production-grade serving with PagedAttention).

  - Nunchaku (high-performance for Flux, Qwen, SDXL).

- Cloud Providers:

  - Direct API key integration for OpenAI, Anthropic, Gemini, OpenRouter, Cerebras, Minimax, HuggingFace, Fal AI, Replicate, Kie.ai.

  - Mix and match providers within a single workflow.

- Model Management: Search, download, and manage model weights from HuggingFace Hub directly within the app.

Multimodal Capabilities:

- Process text, image, audio, and video within a single workflow.

Extensive Building Blocks:

- 1000+ ready-to-use components for AI models, data processing, file operations, computation, control, and more.

Chat Assistant:

- Interact with and run workflows through natural conversation.

Built-in Asset Manager:

- Centralized management for images, audio, video, documents (PNG, JPG, GIF, SVG, WebP, MP3, WAV, MP4, MOV, AVI, PDF, TXT, JSON, CSV, DOCX).
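As a rough illustration of how an asset manager might sort files by type, the sketch below maps the file formats listed above to the four asset kinds. The grouping of extensions into categories and the `asset_category` helper are assumptions made for this example, not Nodetool's implementation.

```python
from pathlib import PurePath
from typing import Optional

# Formats as listed above; the grouping into categories is an assumption.
ASSET_TYPES = {
    "image": {"png", "jpg", "gif", "svg", "webp"},
    "audio": {"mp3", "wav"},
    "video": {"mp4", "mov", "avi"},
    "document": {"pdf", "txt", "json", "csv", "docx"},
}


def asset_category(filename: str) -> Optional[str]:
    """Return the asset category for a file name, or None if unsupported."""
    ext = PurePath(filename).suffix.lower().lstrip(".")
    for category, extensions in ASSET_TYPES.items():
        if ext in extensions:
            return category
    return None


for name in ("clip.MOV", "notes.docx", "archive.zip"):
    print(name, "->", asset_category(name))
```

Matching on the lowercased extension keeps the lookup case-insensitive, so `clip.MOV` and `clip.mov` land in the same category.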

User Benefits

- Accessibility: Build complex AI workflows without coding, making advanced AI approachable for everyone.

- Transparency & Debuggability: Real-time execution and step-by-step inspection provide full visibility and confidence in workflow operation.

- Privacy & Control: Keep data and models local, ensuring maximum privacy and ownership.

- Flexibility: Run workflows locally or deploy to the cloud, using a wide range of local and cloud models.

- Cost-Efficiency: Leverage local inference to reduce cloud costs and scale to zero for deployed workflows.

- Versatility: Create a wide array of AI applications, from agents and RAG to creative pipelines and data processing.

- Community Support: Open-source nature fosters community contributions, shared workflows, and direct access to developers.

Compatibility and Integration

- Operating Systems: macOS, Windows, Linux.

- Hardware Requirements: An NVIDIA GPU or Apple Silicon (M1 or later), plus at least 20 GB of free disk space for model downloads.

- Cloud Deployment: RunPod, Google Cloud, and custom servers.

- API Integrations: OpenAI, Anthropic, Gemini, OpenRouter, Cerebras, Minimax, HuggingFace, Fal AI, Replicate, Kie.ai.

- Local Model Support: MLX, Ollama, llama.cpp, vLLM, Nunchaku.
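Before downloading models, the free-space requirement can be checked with a few lines of Python. This is a generic sketch using the standard library, not part of Nodetool; the helper name is hypothetical, and reading "20GB" as decimal gigabytes is an assumption.

```python
import shutil

# 20 GB read as decimal gigabytes (assumption; adjust if GiB is intended).
REQUIRED_FREE_BYTES = 20 * 1000**3


def has_room_for_models(path: str = ".", required: int = REQUIRED_FREE_BYTES) -> bool:
    """Return True if the filesystem holding `path` has enough free space."""
    free = shutil.disk_usage(path).free
    return free >= required


if has_room_for_models():
    print("Enough free space for model downloads.")
else:
    print("Less than 20 GB free; consider freeing space first.")
```

Pass the directory where models will actually be stored as `path`, since free space can differ between drives.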

Access and Activation Method

- Download: Available for direct download on macOS, Windows, and Linux from the official website.

- Open Source: The entire stack is licensed under AGPL-3.0, so users can inspect, modify, and self-host it.